Modern frontend testing with Vitest, Storybook, and Playwright

Mention “testing” to a frontend engineer, and you risk triggering PTSD. Frontend testing has traditionally been difficult to do and has offered limited value. After all, you can see the UI right on your screen; why would you need to write automated tests to confirm what you can already observe in the browser?

In this blog post, we’ll share why we think frontend testing is worth doing, why it had a bad reputation in the past, and the approach we have taken to make our tests easy to write and maintain.

Why we test our frontend

Defined Networking’s Managed Nebula product is used by savvy home users and networking professionals to securely connect remote computers together in a way that is reliable and can scale to hundreds of thousands of hosts. As such, it is a critical piece of infrastructure for them, and we do everything possible to ensure it operates as intended. Of course, that means our backend services need to be rock solid, but a broken admin panel website could also prevent a user from making changes to their network, so we see it as a critical part of our stack as well.

Testing our UI has other less obvious benefits as well. Just as Test-Driven Development (TDD) can encourage developers to think through edge cases up-front, testing for UI components can have the same effect. Plus, by using the tools and techniques we’ll talk about later, we can ensure that essential accessibility rules are being met. Beyond all that, having an established suite of frontend tests gives us the confidence to iterate and make changes to the product quickly without worrying about unintentional regressions.

So why doesn’t everyone write UI tests?

If there are so many benefits to testing frontend code, why is it so often avoided? Unfortunately, until fairly recently, the cost-benefit equation was skewed heavily toward the “cost” side.

One of the core issues is that tests typically run in CI in a Node.js environment rather than in the code’s actual target environment: a browser. Developers have had to resort to mocking (faking) browser APIs using tools like jsdom, but when a test failed, they were left wondering whether their code was broken or the mocks were just behaving differently than a real browser would. Furthermore, the only real help testing tools could offer when debugging a failing UI test was to dump a long string of DOM markup into the terminal, which is difficult to interpret quickly. Good luck figuring out why a test failed if a wall of serialized HTML is all you get.

To test frontend code in an actual browser, some teams fell back to using end-to-end testing tools like Selenium or Cypress, which load up the website in a real browser. While this does give a greater degree of confidence, such tests can be slow and brittle. They are better suited to verifying the connection between the frontend and the backend, rather than the behavior of individual components.

How Defined Networking writes frontend tests

Luckily, thanks to the efforts of many excellent open-source developers, testing frontend projects has become much easier and more valuable in the last few years. The rest of this post will discuss the four main types of tests that we use at Defined Networking and the tools we use to create them.

Unit tests (Vitest)

The term “unit test” can mean many different things. For us, it means tests for small chunks of reusable logic that live outside of components. These could be utility functions, custom React hooks, or any other code that eventually gets imported and used in a component. For these, we use Vitest as our test runner. We like that it picks up on our Vite config automatically and handles ESM dependencies without any fuss. It runs our suite of more than 250 tests across 40 files in 6.5 seconds. Nearly half of our test files also use fast-check for property-based testing, which runs each test against many generated inputs and gives us more confidence that unexpected input will not break our code.
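As a rough illustration of the property-based idea, the sketch below checks a round-trip property for a pair of hypothetical utilities, `ipv4ToInt` and `intToIpv4`, invented for this example. To keep it free of dependencies, it uses a small seeded random generator rather than fast-check. With fast-check, the loop would be replaced by `fc.assert` and `fc.property`, which also shrink a failing input to a smaller counterexample, and the check would sit inside a Vitest `test` block.

```typescript
// Hypothetical utility: parse a dotted-quad IPv4 address into a 32-bit unsigned integer.
function ipv4ToInt(ip: string): number {
  const parts = ip.split(".");
  if (parts.length !== 4) {
    throw new Error(`invalid IPv4 address: ${ip}`);
  }
  let result = 0;
  for (const part of parts) {
    const octet = Number(part);
    if (!/^\d{1,3}$/.test(part) || octet > 255) {
      throw new Error(`invalid IPv4 address: ${ip}`);
    }
    result = result * 256 + octet;
  }
  return result;
}

// Hypothetical utility: format a 32-bit unsigned integer as a dotted-quad string.
function intToIpv4(value: number): string {
  return [24, 16, 8, 0].map((shift) => (value >>> shift) & 255).join(".");
}

// Small seeded pseudo-random generator (mulberry32) so that any failure is reproducible.
function mulberry32(seed: number): () => number {
  let state = seed | 0;
  return () => {
    state = (state + 0x6d2b79f5) | 0;
    let t = state;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Property: formatting and then parsing any 32-bit value gives back the same value.
// Returns the first counterexample found, or null if every run passes.
function findRoundTripCounterexample(runs = 1000, seed = 42): number | null {
  const random = mulberry32(seed);
  for (let i = 0; i < runs; i++) {
    const value = Math.floor(random() * 4294967296);
    if (ipv4ToInt(intToIpv4(value)) !== value) {
      return value;
    }
  }
  return null;
}
```

Calling `findRoundTripCounterexample()` returns `null` when every generated value survives the round trip. Returning the failing value, rather than just `false`, mirrors what makes property-based tools useful: the report tells you which input broke the code.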

Component tests (Storybook)

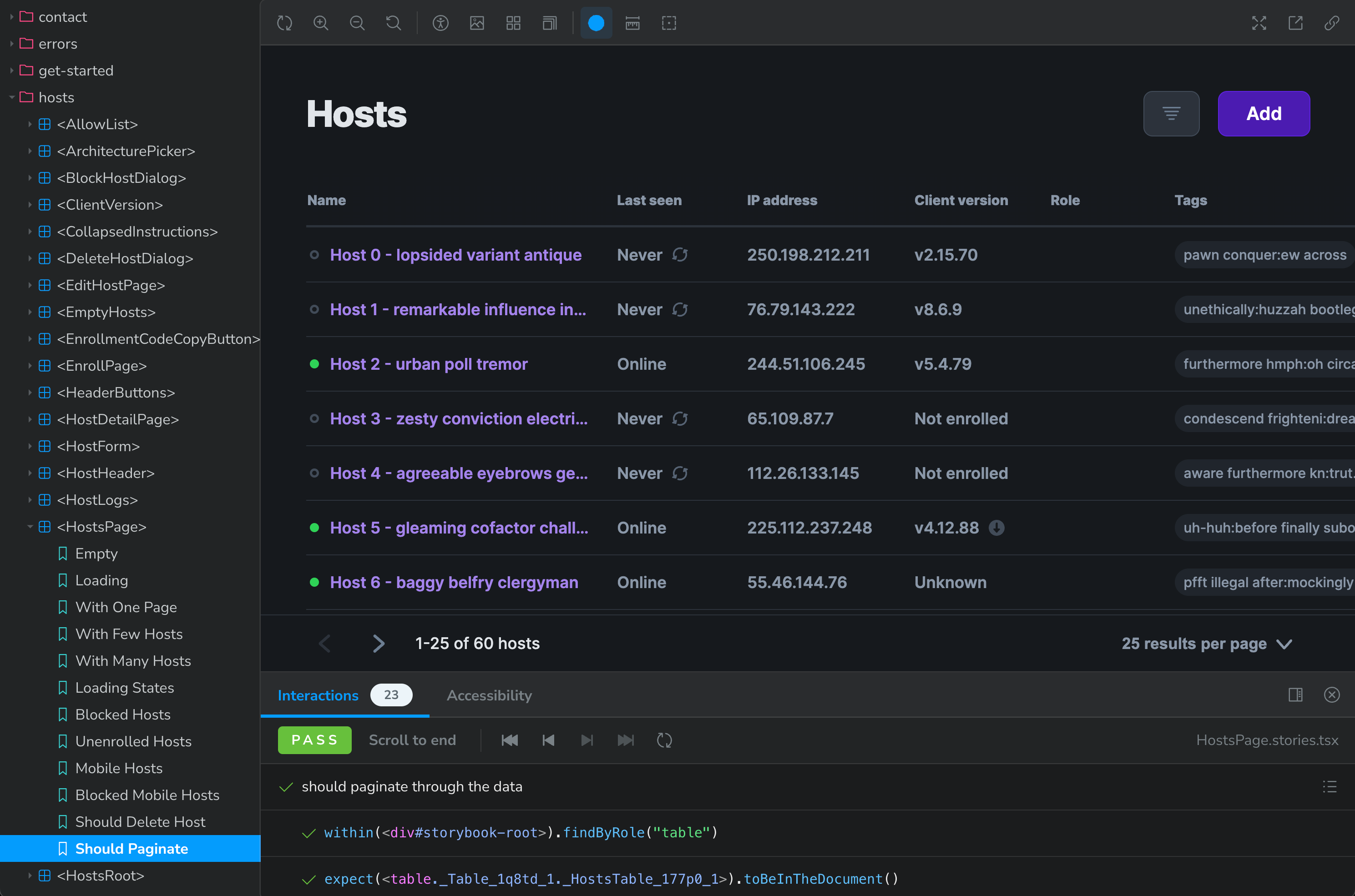

Most of our time on the frontend of the Managed Nebula admin panel is spent building React components. These could be small reusable components like buttons and banners, more complex components like tables and modals, or even full pages. Even the entire application is a component made up of many other components. So it’s vital for us to have a solid strategy for testing all kinds of components, and the tool we use for this is Storybook.

Storybook is often thought of as a documentation tool. Many design libraries publish a Storybook site to list the components on offer, provide helpful tips about them, and demonstrate how they can be used. As a very small team, we don’t use Storybook primarily for documentation. Instead, we use it as an isolated environment where we can build components outside of the app, which gives us a place to work on them before the rest of the page they belong to is built. We create a different “story” for each state a component can be in, such as error conditions, empty states, and default states, a bit like writing test cases up-front in TDD. We can even mock out API responses so that we are not waiting for the backend to send us data before we get to work on a feature.

Not long ago, Storybook also added play functions, which can trigger interactions with a component and assert on its behavior using the popular Testing Library set of tools. With these, we can click on components and enter text into them as a user would, and then check that they behave the way we expect. We’ve also found that because Testing Library encourages selecting elements by their label or role instead of by CSS classes, our accessibility has improved as well. In CI, we use the Storybook test-runner to execute all of our play functions and ensure that nothing has broken.

We currently have more than 1,000 stories for over 200 components, and over half of the stories have interaction tests with assertions. That makes these the vast majority of our tests; they take about 3 minutes to run in CI, split across 6 jobs. The best part is that if a test fails, we can pull it up in a browser and see what is going wrong instead of looking only at the page’s HTML markup. By creating stories and testing components at all levels of complexity, from buttons to the entire app, we gain confidence that they behave correctly.

Visual snapshot tests (Chromatic)

The way a component behaves is indeed important, but what about how it looks? Not only can broken styles be embarrassing, but they can also cause usability problems. Styles written in CSS are notoriously easy to break accidentally, and making changes to any kind of base styles can be terrifying.

I just changed the default margin of paragraphs, but did I remember to check all of the edge cases of all of the dusty, rarely-visited corners of my app, like onboarding and error states?

Luckily, as you’ve seen above, we have Storybook stories for each of the dusty corners and edge cases, so we can take a picture of each story when it is created and then again any time something changes, comparing the two to look for differences. It’s possible to build a home-grown system to do this, and there are a number of services that will do it for a monthly fee. We chose Chromatic, which is built by the developers of Storybook itself. These visual snapshot tests have saved us from shipping visual bugs several times and have given us the confidence to, for example, change the default bottom spacing on all heading elements without worrying that we would make an obscure page in the app look broken.

Here’s an example of a change that Chromatic recently flagged in a refactoring PR, keeping us from shipping a broken styling change (shown in green):

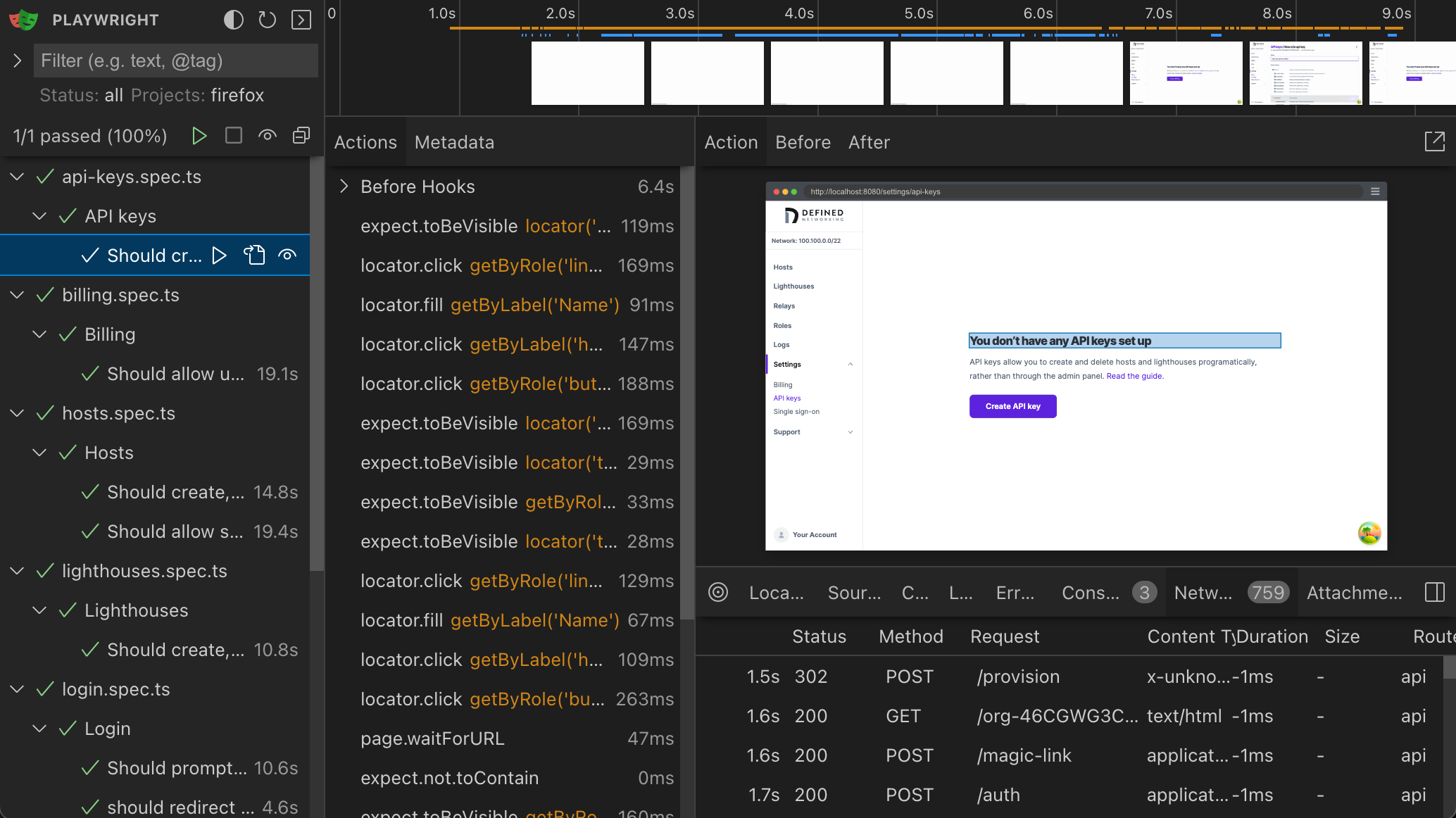
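
To give a sense of the comparison step at the heart of a home-grown system, here is a minimal sketch that counts how many pixels differ between two same-sized RGBA images. The `Snapshot` shape, `countChangedPixels`, `solidSnapshot`, and the `tolerance` parameter are all assumptions made for this example. It is not a description of how Chromatic compares images, and a real setup would also need to capture the screenshots, store baselines, and present the differences for review.

```typescript
// Hypothetical shape for a decoded screenshot: RGBA bytes, four per pixel.
interface Snapshot {
  width: number;
  height: number;
  data: Uint8ClampedArray;
}

// Count the pixels where any channel differs by more than `tolerance` (0 to 255).
function countChangedPixels(
  baseline: Snapshot,
  candidate: Snapshot,
  tolerance = 0,
): number {
  if (baseline.width !== candidate.width || baseline.height !== candidate.height) {
    throw new Error("snapshots must have the same dimensions");
  }
  let changed = 0;
  for (let offset = 0; offset < baseline.data.length; offset += 4) {
    for (let channel = 0; channel < 4; channel++) {
      const delta = Math.abs(
        baseline.data[offset + channel] - candidate.data[offset + channel],
      );
      if (delta > tolerance) {
        changed++;
        break; // count each pixel at most once
      }
    }
  }
  return changed;
}

// Build a solid-color snapshot, which is handy for trying the function out.
function solidSnapshot(
  width: number,
  height: number,
  rgba: [number, number, number, number],
): Snapshot {
  const data = new Uint8ClampedArray(width * height * 4);
  for (let offset = 0; offset < data.length; offset += 4) {
    data.set(rgba, offset);
  }
  return { width, height, data };
}
```

A non-zero result from `countChangedPixels` would mark the story as changed and in need of human review. The `tolerance` parameter is there because rendering can vary slightly between runs, so an exact byte-for-byte comparison may be too strict in practice.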

End-to-end tests (Playwright)

Finally, at the top of our testing stack come end-to-end (e2e) tests, which verify that our deployed application works together with the deployed database and API server. All of our other tests use fake data to put components into particular states and to avoid making potentially slow and unreliable network requests. However, this means we need to double-check that the fake responses match the actual behavior of the real server. For that reason, we write a handful of tests that exercise everything from our signup and authentication flow (using real magic-link emails and two-factor authentication) to host creation and deletion to plan upgrades. These do not need to test how our components behave in different situations (that is already covered by our component tests); rather, they test how the website interacts with the backend in order to achieve the goals of a user.

For these tests, we use Playwright, which launches headless versions of multiple browsers. If a test fails in CI, we get a debugging trace that shows the state of the page at each step of the test, which we find helpful in determining why the test failed. In addition to running these tests each time we make a change to the frontend, we also run them overnight to catch any unintentional breaking changes to the API server in our staging environment before they are deployed to production.
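
A Playwright configuration along the following lines enables the multi-browser runs and failure traces described above. Treat it as a sketch under stated assumptions rather than a copy of a real setup: the `testDir` value, the `E2E_BASE_URL` environment variable, the fallback URL, and the retry count are all hypothetical.

```typescript
// playwright.config.ts (illustrative sketch; paths, URLs, and retry counts are assumptions)
import { defineConfig, devices } from "@playwright/test";

export default defineConfig({
  // Hypothetical directory holding the end-to-end specs.
  testDir: "./e2e",

  // Retry once in CI so that a single flaky network call does not fail the run.
  retries: process.env.CI ? 1 : 0,

  use: {
    // Hypothetical variable pointing the tests at a deployed environment.
    baseURL: process.env.E2E_BASE_URL ?? "http://localhost:5173",

    // Keep a step-by-step trace only for tests that fail.
    trace: "retain-on-failure",
  },

  // Run every test against several browser engines.
  projects: [
    { name: "chromium", use: { ...devices["Desktop Chrome"] } },
    { name: "firefox", use: { ...devices["Desktop Firefox"] } },
    { name: "webkit", use: { ...devices["Desktop Safari"] } },
  ],
});
```

Browsers launched this way run headless unless configured otherwise, and a retained trace can be opened in Playwright’s trace viewer to step through the page state at each action.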

Honorable mention (static analysis)

While not always thought of as “testing,” there is another class of tooling that helps us avoid shipping broken code: static analysis. We use linters (ESLint and Stylelint), which enforce best practices and help avoid certain kinds of bugs. We also write our code in TypeScript, which adds static types to JavaScript and gives us confidence that we are not making typos in the code or calling methods that don’t actually exist.
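
As a small illustration of the kind of mistake the compiler catches, consider the sketch below. The `Host` type and the `statusLabel` function are hypothetical, invented for this example.

```typescript
// Hypothetical types for illustration only.
type HostStatus = "online" | "offline" | "blocked";

interface Host {
  name: string;
  status: HostStatus;
}

function statusLabel(host: Host): string {
  switch (host.status) {
    case "online":
      return `${host.name} is online`;
    case "offline":
      return `${host.name} is offline`;
    case "blocked":
      return `${host.name} is blocked`;
    default: {
      // If a new status is added to HostStatus without a matching case,
      // this assignment stops compiling.
      const unhandled: never = host.status;
      return unhandled;
    }
  }
}

// Both of these lines would be rejected at compile time:
// statusLabel({ name: "laptop", status: "onlin" });               // typo in the status value
// statusLabel({ name: "laptop", status: "online" }).toUpperCas(); // method does not exist
```

Neither mistake needs a test to be caught: the type checker reports them as soon as the file is compiled.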

Wrap-up

We take frontend development seriously here at Defined Networking, and through a combination of unit, component, visual snapshot, and end-to-end tests, we do our best to ship high-quality, smooth-functioning interfaces to our users. Open source tools like Vitest, fast-check, Storybook, and Playwright are crucial for our testing strategy. In fact, we contribute to the development of many of these tools through code submissions and monthly financial support. After all, we are a company built on top of open source software: Nebula.

If you have any questions or thoughts about anything we’ve discussed here, please don’t hesitate to reach out to us, and we’ll be thrilled to talk.
